Artificial General Intelligence (AGI)

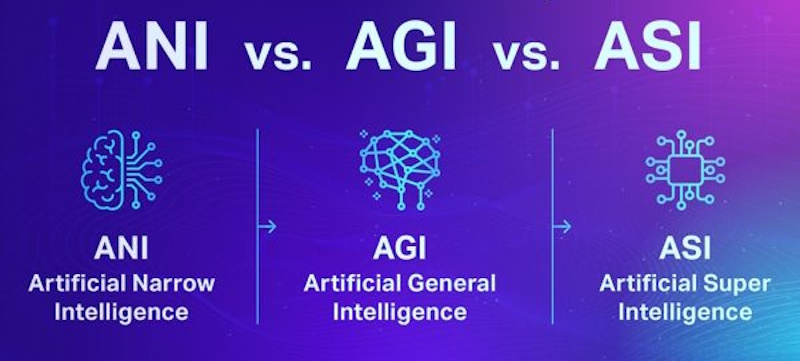

A theoretical form of AI that has the ability to understand, learn, and apply knowledge across a wide range of tasks, much like a human being.

Unlike narrow AI, which is designed to perform specific tasks within predefined parameters, AGI aims to exhibit generalized cognitive abilities like those of humans: systems that can think, learn, and adapt across a broad spectrum of tasks. Although AGI remains theoretical, ongoing research continues to explore its feasibility and its implications for society, and some argue it can never be achieved.

Artificial General Intelligence (AGI) refers to a hypothetical form of artificial intelligence that can match or exceed human cognitive abilities across virtually all tasks. Unlike today's AI, which is narrow and specialized, AGI would be able to understand, learn, reason, and adapt in any domain the way a human can. AGI (sometimes called human-level AI) is defined as intelligence that can generalize knowledge, transfer skills between domains, and solve novel problems without being retrained for each task. This is fundamentally different from current AI systems, which excel only in specific areas like image recognition or language generation.

AGI is the stage at which an AI system can perform any intellectual task a human can, representing the long-term goal of replicating human intelligence in software. It is not just about performing tasks; it is about flexible, autonomous learning and reasoning. AGI would have versatility, adaptability, and the ability to understand context, emotions, and complex real-world situations, all traits that narrow AI lacks. It would be capable of teaching itself, solving problems it was never explicitly trained for, and operating with a degree of self-understanding and autonomy.

Key Characteristics of AGI

- Human-like Cognitive Abilities: AGI systems are expected to perform any intellectual task that a human can do, including reasoning, problem-solving, perception, and language comprehension.

- Self-learning and Adaptability: AGI would have the capacity to learn autonomously from its experiences and adapt to new situations without requiring explicit programming for each task.

- Versatility: While current AI systems excel in specific domains like image recognition or natural language processing, AGI would be able to operate across various fields and contexts, solving complex problems without prior training in those specific areas.

- Theoretical Status: As of now, AGI remains a theoretical concept; no true AGI systems exist yet. Researchers are divided on whether achieving AGI is possible and what it would entail.

Differences Between AGI and Narrow AI

| Aspect | AGI | Narrow AI |
| --- | --- | --- |
| Definition | AI with human-level cognitive functions | AI designed for specific tasks |
| Learning Ability | Autonomous self-learning across domains | Limited to predefined parameters |
| Task Performance | Can perform any intellectual task | Performs well only in specific areas |
| Current Status | Theoretical and under research | Widely implemented and used |

Research & Development

Current AGI research and development aims to create AI systems capable of human-level reasoning, learning, and adaptability across a wide range of tasks. AGI would be a major shift in artificial intelligence, moving beyond narrow, task-specific systems toward models with flexible, self-directed learning and broad cognitive abilities. This goal has driven researchers to explore new architectures, training methods, and theoretical frameworks that could support the generalization, abstraction, and autonomous problem-solving that AGI requires.

Modern AGI research is shaped by several key approaches: neural-symbolic systems, which combine deep learning with logic; scaled transformer models, which extend today's large language models; recursive self-improvement architectures, which allow systems to refine their own capabilities; and brain-inspired designs, which attempt to replicate biological cognition. Each approach offers unique strengths and faces distinct challenges, from computational scaling limits to difficulties in achieving robust reasoning and long-term planning.

Leading research organizations are also shaping the AGI landscape. Google DeepMind emphasizes a responsible path to AGI, prioritizing readiness, safety, and proactive risk assessment while exploring agentic systems capable of reasoning, planning, and autonomous action. Its work highlights AGI's potential to accelerate breakthroughs in medicine, climate science, and economic growth. Meanwhile, the AGI Society promotes global collaboration, conferences, and knowledge-sharing to advance general-purpose intelligence research across academia and industry.

A parallel line of research focuses on the architectures and scaling laws behind large language models. The evolution from early statistical models to transformer-based systems like BERT, GPT, and multimodal architectures has expanded AI's reasoning and generative capabilities, pushing the field closer to AGI-like behavior. These advances rely on innovations in optimization, training data, compute infrastructure, and multimodal integration.

At the same time, AGI development raises critical questions about safety and governance. There is a need for proactive risk assessment and collaboration across the AI community to ensure AGI systems behave reliably and align with human values. Researchers are also exploring alternative emergence pathways, such as distributed "patchwork AGI," where general intelligence arises from coordinated networks of specialized agents rather than a single monolithic system. These perspectives broaden the scope of AGI safety research and highlight the complexity of future AI ecosystems.

Overall, AGI research and development is a multidisciplinary effort spanning computer science, neuroscience, cognitive science, ethics, and engineering. It blends ambitious technical innovation with careful attention to safety, governance, and societal impact. As organizations continue to scale models, explore new architectures, and refine alignment techniques, AGI remains one of the most challenging, and potentially transformative, frontiers in modern technology.

R&D Pathways:

- Neural-symbolic systems: Combine deep learning with logic.

- Scaled transformer models: Extend today's large language models.

- Recursive self-improvement architectures: Allow systems to refine their own capabilities.

- Brain-inspired designs: Attempt to replicate biological cognition.

Future Benefits of AGI

- Healthcare: AGI could analyze massive volumes of patient data to identify at-risk patients, predict future diseases, and design personalized treatments.

- Education: AGI could curate a unique curriculum for students based on their individual academic performance and learning style.

- Customer service: AGI could use past calls and demographic information to tailor service to each customer, anticipate questions, and take proactive measures before issues occur.

- Finance: AGI could compile information to enhance the accuracy of financial models, predict market behavior, and execute informed trades based on real-time insights.

- Self-driving cars: AGI could collect real-time information (on weather, traffic patterns, etc.) from sensors and make instant adjustments to adapt to various scenarios.

- Programming: AGI could understand coding logic to not only generate code, but also make recommendations and design entire functions to fulfill particular needs.

- Manufacturing: AGI could process large amounts of data gathered from sensors to predict machine issues and alert teams before equipment breaks down.

Implications of AGI

AGI represents a shift in technology with consequences that extend far beyond today's narrow AI systems.

The implications of AGI are a mix of extraordinary opportunity and profound risk. It could elevate human capability, solve global challenges, and drive unprecedented innovation, but it could also destabilize economies, shift power structures, and challenge fundamental ethical principles. The future impact of AGI will depend heavily on how it is governed, who controls it, and how well its goals are aligned with human values.

AGI has far-reaching implications across technological, ethical, and societal domains, with the potential to transform entire sectors, while also introducing new risks. Because AGI would be capable of human-level reasoning, learning, and adaptation, its emergence would reshape how societies function, how economies operate, and how decisions are made at every level.

One of the most significant implications of AGI involves the economy and workforce. AGI could drive major productivity gains, but if not managed carefully it could also cause workforce disruption, income inequality, and financial instability. It could displace large categories of jobs while creating new high-skill roles, forcing governments and industries to rethink training, safety nets, and long-term economic planning. Depending on how society adapts and how the technology evolves, AGI could become either a powerful engine of prosperity or a destabilizing force.

AGI also raises ethical questions. The core ethical challenge is alignment; that is, ensuring AGI systems act in ways consistent with human values and norms. Without proper alignment, AGI could make autonomous decisions that conflict with human intentions or cause unintended harm. AGI could reshape privacy, power dynamics, and human autonomy, especially if a small number of organizations control highly capable systems. These concerns make governance, transparency, and oversight essential.

AGI also carries enormous potential for positive change. It could reshape economies, redefine societal structures, and unlock new forms of innovation across science, medicine, and infrastructure. Its reasoning ability could accelerate breakthroughs in climate modeling, drug discovery, education, and global problem-solving. Yet the same power introduces serious risks, including unintended behaviors, concentration of control, and challenges to human agency.

- Ethical Concerns: The creation of machines with human-like intelligence poses ethical dilemmas regarding autonomy, decision-making, and the potential impact on employment and society.

- Safety and Control: Ensuring that AGI systems act in alignment with human values and intentions is crucial to prevent unintended consequences.

- Economic Impact: AGI could revolutionize industries by performing tasks more efficiently than humans, leading to significant economic shifts.

Links

The Singularity page.

The Singularity has a name...Karen is an AI story.

External links:

- techtarget.com/searchenterpriseai/definition/artificial-general-intelligence-AGI

- aws.amazon.com/what-is/artificial-general-intelligence

- investopedia.com/artificial-general-intelligence

- en.wikipedia.org/wiki/Artificial_general_intelligence

- builtin.com/artificial-intelligence/artificial-general-intelligence

- coursera.org/articles/what-is-artificial-general-intelligence

- mckinsey.com/featured-insights/mckinsey-explainers/what-is-artificial-general-intelligence-agi

- scientificamerican.com/article/what-does-artificial-general-intelligence-actually-mean

- timdettmers.com/2025/12/10/why-agi-will-not-happen